-

Hanyu Li, Byung Hyung Kim, “Hierarchical Dynamic Local-Global-Graph Representation Learning for EEG Emotion Recognition,” IEEE Transactions on Instrumentation and Measurement (TIM), vol.74, no.2552014, 2025. IF:5.9, JCR Rank:7/79=8.2% in Instruments and Instrumentation. [code] [pdf] [link]

-

ChaeEun Woo, SuMin Lee, Soo Min Park, Byung Hyung Kim, “RecSal-Net: Recursive Saliency Network for Video Saliency Prediction”, Neurocomputing, vol.650, no.130822, 2025. [code] [pdf] [link]

-

Hyunwook Kang, Jin Woo Choi, Byung Hyung Kim, “Convolutional Channel Modulator for Transformer and LSTM Networks in EEG-based Emotion Recognition,” Biomedical Engineering Letters, vol.15, pp.749-761, 2025. [code] [pdf] [link]

-

HyoSeon Choi, Dahoon Choi, Netiwit Kaongoen, Byung Hyung Kim, “Detecting Concept Shifts under Different Levels of Self-awareness on Emotion Labeling,” 27th International Conference on Pattern Recognition (ICPR), pp.276-291, Dec, 2024. [code] [pdf] [link]

-

Hyunwook Kang, Jin Woo Choi, Byung Hyung Kim, “Cascading Global and Sequential Temporal Representations with Local Context Modeling for EEG-based Emotion Recognition,” 27th International Conference on Pattern Recognition (ICPR), pp.305-320, Dec, 2024. [code] [pdf] [link]

-

우채은, 이수민, 박수민, 최세린, 류제경, 김병형, “Enhanced Visual Saliency Prediction Based on Video Swin Transformer,” Journal of Korea Multimedia Society (멀티미디어학회논문지), vol.27, no.11, pp.1314-1325, Nov, 2024. [pdf] [link]

-

우채은, 최효선, 김병형, “An EEG-based Valence-Arousal Regression Model Using Multi-Output Prediction,” Journal of Biomedical Engineering Research (의공학회지), vol.45, no.5, pp.279-285, Oct, 2024. [pdf] [link]

-

방윤석, 김병형, “A Study on Riemannian Procrustes Analysis in EEG-based SPD-Net,” Journal of Biomedical Engineering Research (의공학회지), vol.45, no.4, pp.179-186, Aug, 2024. [pdf] [link]

-

Seunghun Koh, Byung Hyung Kim+, Sungho Jo+, “Understanding the User Perception and Experience of Interactive Algorithmic Recourse Customization,” ACM Transactions on Computer-Human Interaction (TOCHI), vol.31, no.3, 2024, +Co-Corresponding Author. [pdf] [link]

-

Kobiljon Toshnazarov, Uichin Lee, Byung Hyung Kim, Varun Mishra, Lismer Andres Caceres Najarro, Youngtae Noh, “SOSW: Stress Sensing with Off-the-Shelf Smartwatches in the Wild,” IEEE Internet of Things Journal (IoT-J), vol.11, no.12, pp.21527-21545, 2024. 2023 JCR IF:10.6, Rank:4/158=2.2% in Computer Science, Information Systems. [pdf] [link]

-

김승한+, 진태균+, 박혜민, 정희재, 김병형, “Speeding Up a Time-Domain SSVEP-BCI System Using Short Signal Lengths in Virtual Reality,” Korea Computer Congress (KCC), pp.1185-1187, Jun, 2024. +Co-first Authors. [pdf] [link]

-

육지훈, 김병형, “A PPG Signal Reconstruction Model Based on Complex-Valued Neural Networks,” Korea Computer Congress (KCC), pp.732-734, Jun, 2024. [pdf] [link]

-

최효선, 최다훈, 김병형, “Applying OOD Detection Using MSP to EEG-based Emotion Classification,” KIISE Journal (정보과학논문지), vol. 51, no. 5, pp.438-444, 2024. *Invited Paper (invited from KCC Best Papers)*. [pdf] [link]

-

강현욱, 김병형, “ConTL: Improving EEG-based Emotion Recognition by Combining CNN, Transformer, and LSTM,” KIISE Journal (정보과학논문지), vol. 51, no. 5, pp.454-463, 2024. [pdf] [link]

-

HyoSeon Choi, ChaeEun Woo, JiYun Kong, Byung Hyung Kim, “Multi-Output Regression for Integrated Prediction of Valence and Arousal in EEG-Based Emotion Recognition,” 12th IEEE International Winter Conference on Brain-Computer Interface, Feb, 2024. [code] [pdf] [link]

-

Yunjo Han, Kobiljon E Toshnazarov, Byung Hyung Kim, Youngtae Noh, Uichin Lee, “WatchPPG: An Open-Source Toolkit for PPG-based Stress Detection using Off-the-shelf Smartwatches,” Adjunct Proceedings of ACM International Joint Conference on Pervasive and Ubiquitous Computing (Ubicomp) & ACM International Symposium on Wearable Computing (ISWC), pp.208-209, Oct, 2023. [pdf] [link]

-

Netiwit Kaongoen, Jaehoon Choi, Jin Woo Choi, Haram Kwon, Chaeeun Hwang, Guebin Hwang, Byung Hyung Kim, Sungho Jo, “The future of wearable EEG: A review of ear-EEG technology and its applications,” Journal of Neural Engineering, vol.20, no.5, 2023. [pdf] [link]

-

Jaehoon Choi, Netiwit Kaongoen, HyoSeon Choi, Minuk Kim, Byung Hyung Kim+, Sungho Jo+, “Decoding Auditory-Evoked Response in Affective States using Wearable Around-Ear EEG System,” Biomedical Physics and Engineering Express, vol.9, no.5, pp.055029, 2023. +Co-Corresponding Author. [pdf] [link]

-

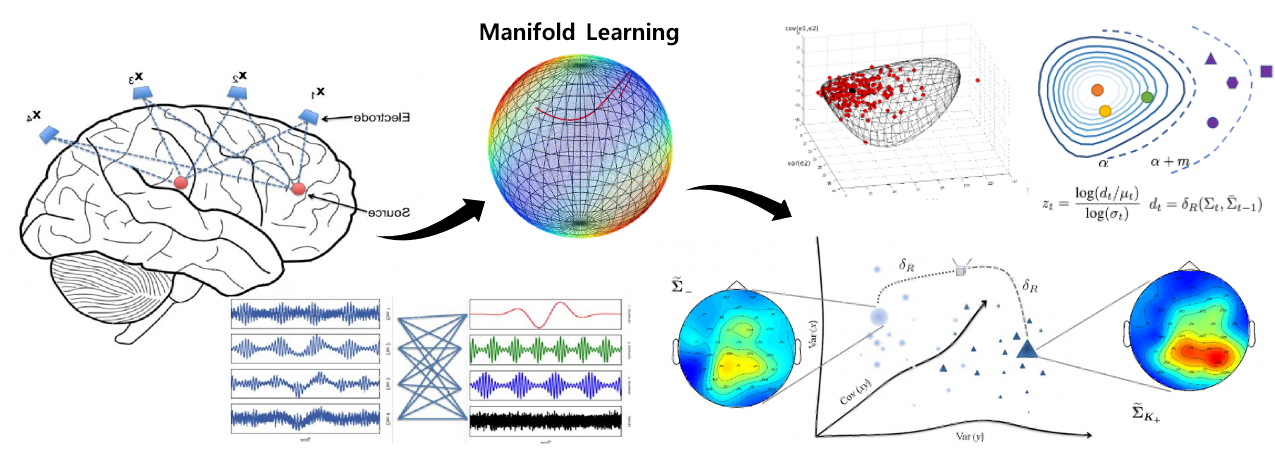

Byung Hyung Kim, Jin Woo Choi, Honggu Lee, Sungho Jo, “A Discriminative SPD Feature Learning Approach on Riemannian Manifolds for EEG Classification,” Pattern Recognition, vol. 143, no. 109751, 2023. 2022 JCR IF:8, Rank:30/275=10.7% in Engineering, Electrical & Electronic. [pdf] [link]

-

최다훈, 최효선, 육지훈, 김병형, “Applying OOD Detection to EEG-based Emotion Classification,” Korea Computer Congress (KCC), pp.706–708, Jun, 2023. Oral Presentation (Acceptance < 28%). *Best Paper Award (Top < 8% = 53/739)*.

-

육지훈, 주기현, 박영진, 김병형, “A Contact-Pressure-Invariant PPG Signal Extraction Model,” Korea Computer Congress (KCC), pp.1151-1153, Jun, 2023.

-

김태훈, 백범성, 김성언, 이은정, 안태현, 김병형, “A Deep Representation-Based Domain Adaptation Method Using Wasserstein Distance Loss for EEG Classification,” Korea Computer Congress (KCC), pp.1042–1044, Jun, 2023. Oral Presentation (Acceptance < 28%).

-

Jin Woo Choi, Haram Kwon, Jaehoon Choi, Netiwit Kaongoen, Chaeeun Hwang, Minuk Kim, Byung Hyung Kim, Sungho Jo, “Neural Applications Using Immersive Virtual Reality: A Review on EEG Studies,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol.31, pp.1645–1658, 2023. 2022 JCR IF:4.9, Rank:4/68=5.1% in Rehabilitation. [pdf] [link]

-

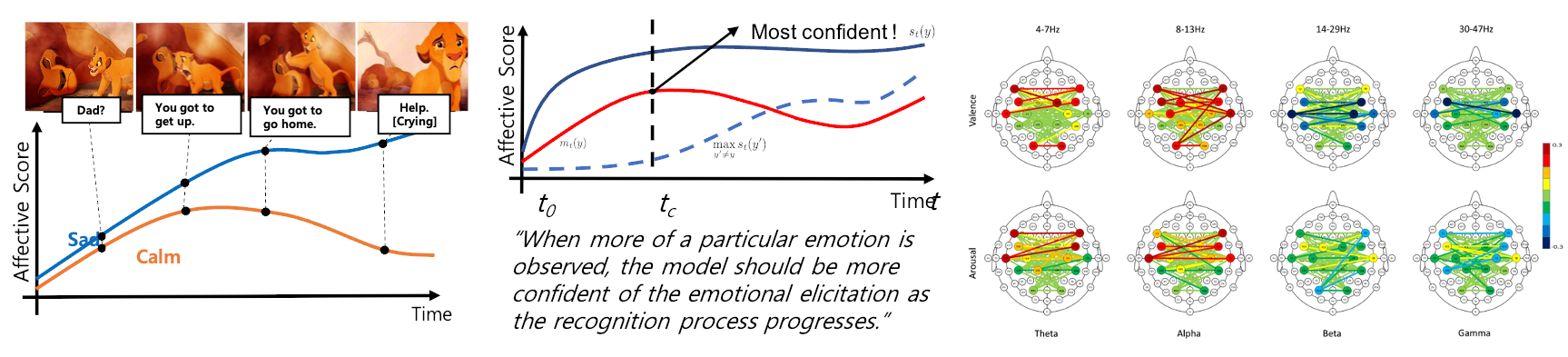

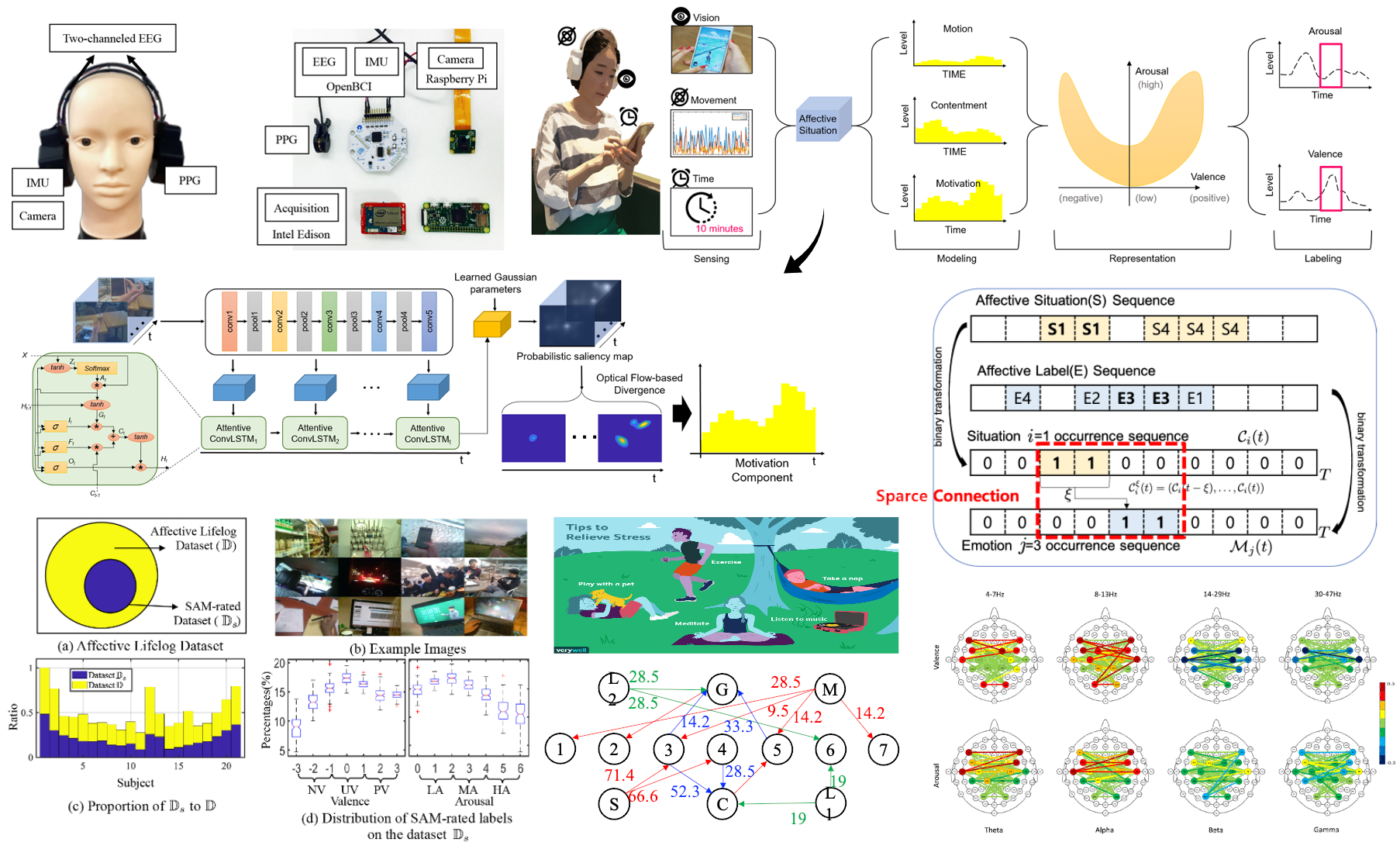

Byung Hyung Kim, Sungho Jo, Sunghee Choi, “ALIS: Learning Affective Causality behind Daily Activities from a Wearable Life-Log System,” IEEE Transactions on Cybernetics, vol.52, no.12, pp.13212–13224, 2022. IF:19.118, JCR Rank:3/146=1.72% in Computer Science, Artificial Intelligence. [pdf] [link]

-

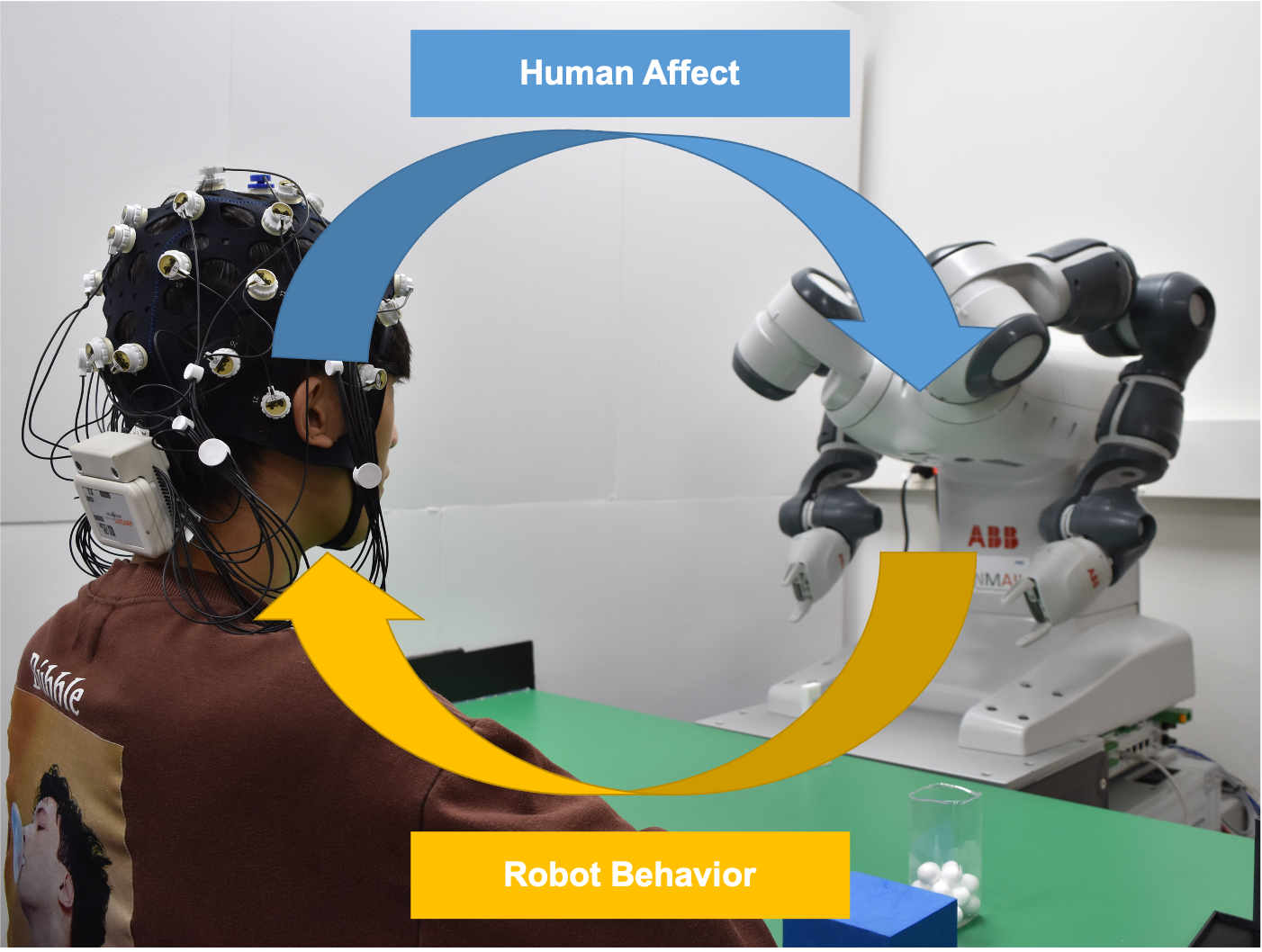

Byung Hyung Kim, Ji Ho Kwak, Minuk Kim, Sungho Jo, “Affect-driven Robot Behavior Learning System using EEG Signals for Less Negative Feelings and More Positive Outcomes,” IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4162-4167, Sep, 2021. [pdf] [link]

-

Yoon-Je Suh, Byung Hyung Kim, “Riemannian Embedding Banks for Common Spatial Patterns with EEG-based SPD Neural Networks,” Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), vol.35, no.1, pp.854–862, Feb, 2021. Acceptance Rate=21.4%, Top-tier in Computer Science. Co-first Author. Corresponding Author. [pdf] [link]

-

Byung Hyung Kim, Yoon-Je Suh, Honggu Lee, Sungho Jo, “Nonlinear Ranking Loss on Riemannian Potato Embedding,” 25th International Conference on Pattern Recognition (ICPR), pp.4348-4355, Jan, 2021. [pdf] [link]

-

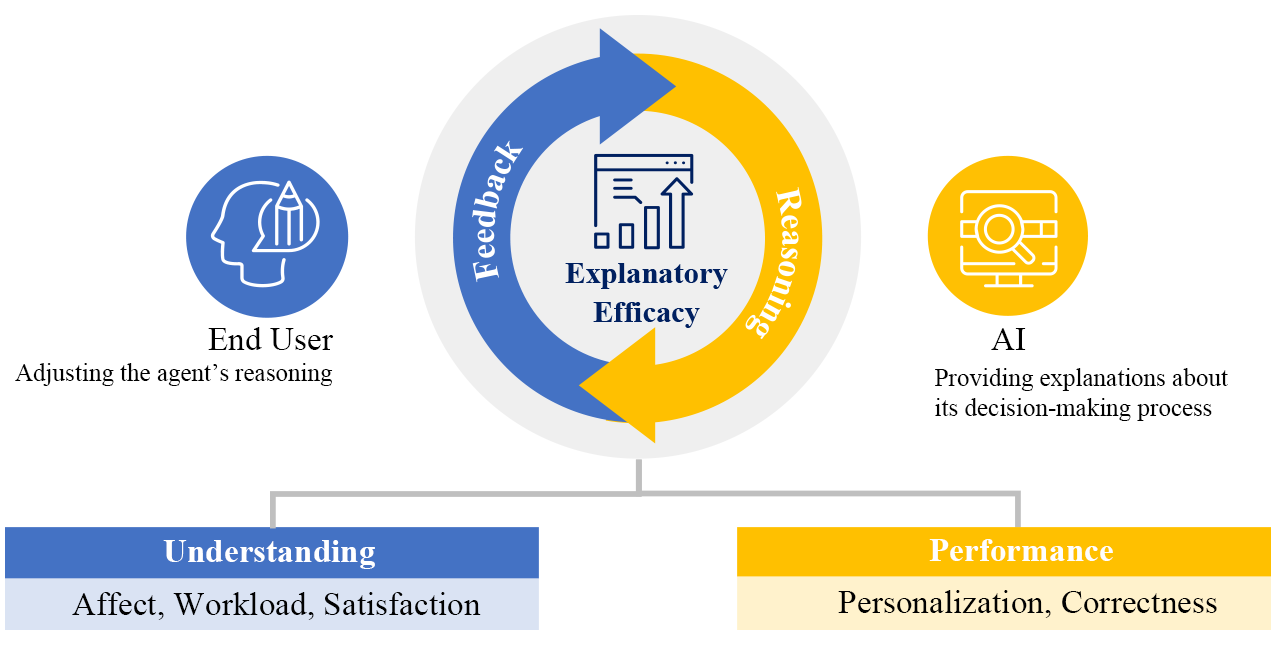

Byung Hyung Kim, Seunghun Koh, Sejoon Huh, Sungho Jo, Sunghee Choi, “Improved Explanatory Efficacy on Human Affect and Workload through Interactive Process in Artificial Intelligence,” IEEE Access, vol.8, pp.189013-189024, 2020. [pdf] [link]

-

Byung Hyung Kim, Sungho Jo, Sunghee Choi, “A-Situ: a computational framework for affective labeling from psychological behaviors in real-life situations,” Scientific Reports, vol.10, no.15916, Sep, 2020. [pdf]

-

Jin Woo Choi, Byung Hyung Kim, Sejoon Huh, Sungho Jo, “Observing Actions through Immersive Virtual Reality Enhances Motor Imagery Training,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol.28, no.7, pp.1614-1622, 2020. 2019 JCR IF:3.340, Rank:7/68=9.56% in Rehabilitation. Co-first Author. [pdf]

-

Byung Hyung Kim, Sungho Jo, “Deep Physiological Affect Network for the Recognition of Human Emotions,” IEEE Transactions on Affective Computing, vol.11, no.2, pp.230-243, 2020. 2019 JCR IF:7.512, Rank:11/136=7.72% in Computer Science, Artificial Intelligence. [pdf]

-

Seunghun Koh, Hee Ju Wi, Byung Hyung Kim, Sungho Jo, “Personalizing the Prediction: Interactive and Interpretable Machine Learning,” 16th IEEE International Conference on Ubiquitous Robots (UR), pp.354-359, Jun, 2019.

-

Byung Hyung Kim, Sungho Jo, “An Empirical Study on Effect of Physiological Asymmetry for Affective Stimuli in Daily Life,” 5th IEEE International Winter Workshop on Brain-Computer Interface, pp.103–105, Jan, 2017.

-

Byung Hyung Kim, Jinsung Chun, Sungho Jo, “Dynamic Motion Artifact Removal using Inertial Sensors for Mobile BCI,” 7th IEEE International EMBS Conference on Neural Engineering, pp.37–40, Apr, 2015.

-

Byung Hyung Kim, Sungho Jo, “Real-time Motion Artifact Detection and Removal for Ambulatory BCI,” 3rd IEEE International Winter Workshop on Brain-Computer Interface, pp.70–73, Jan, 2015.

-

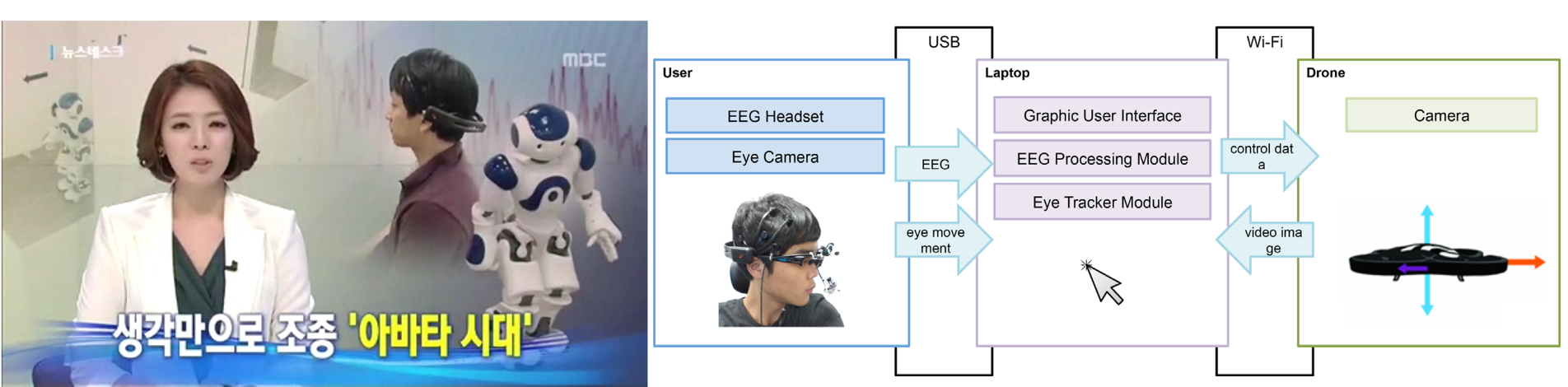

Minho Kim, Byung Hyung Kim, Sungho Jo, “Quantitative Evaluation of a Low-cost Noninvasive Hybrid Interface based on EEG and Eye Movement,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol.23, no.2, pp.159-168, 2015. 2014 JCR IF:3.972, Rank:3/65=4.61% in Rehabilitation.

-

Byung Hyung Kim, Minho Kim, Sungho Jo, “Quadcopter flight control using a low-cost hybrid interface with EEG-based classification and eye tracking,” Computers in Biology and Medicine, vol.51, pp.82-92, 2014. Honorable Mention Paper (Top 10%).

-

Mingyang Li, Byung Hyung Kim, Anastasios Mourikis, “Real-time Motion Tracking on a Cellphone using Inertial Sensing and a Rolling-Shutter Camera,” IEEE International Conference on Robotics and Automation (ICRA), pp.4712-4719, May, 2013.

-

Byung Hyung Kim, Hak Chul Shin, Phill Kyu Rhee, “Hierarchical Spatiotemporal Modeling for Dynamic Video Trajectory Analysis,” Optical Engineering, vol.50, no.107206, Oct, 2011.

-

Byung Hyung Kim, Danna Gurari, Hough O’Donnell, Margrit Betke, “Interactive Art System for Multiple Users Based on Tracking Hand Movements,” IADIS International Conference Interfaces and Human Computer Interaction (IHCI), Jul, 2011.

Our lab welcomes motivated and talented applicants regardless of race, ethnicity, religion, national origin, age, or disability status. We are deeply committed to fostering a collaborative, inclusive, and supportive research environment where all members can thrive and contribute meaningfully to our shared goals.